There’s no shortage of enthusiasm around agentic AI right now — and for good reason. It’s changing how we think about technology’s role in decision-making, autonomy, and execution. But amid the excitement, one truth often gets lost: not every problem should be solved by an agent.

At Aquant, we face this reality every day. Our product and R&D teams are constantly asked to “build an agent” to automate a process, make a tool smarter, or simplify a workflow. Yet when we step back and ask the right questions, we often find that what’s being requested isn’t truly agentic. Forcing AI into the wrong use case can create more problems than it solves.

That’s why we built something new: Aquant’s Agent Evaluator, an internal diagnostic tool that helps us determine whether a use case truly calls for agentic AI or is better handled through traditional SaaS, automation, or another capability entirely.

What started as an internal exercise in clarity and discipline quickly proved valuable beyond our team. The same framework can help IT leaders everywhere navigate AI decisions more confidently and with less risk.

The Problem with Building for “AI’s Sake”

AI has entered what I call the pressure phase. Every CIO and IT leader is expected to drive transformation quickly — while protecting budgets, managing risk, and proving ROI.

When your team is eager to innovate, the temptation is to label everything as an AI project. But not all challenges require autonomy, reasoning, or self-directed action. Some problems simply need better workflows, cleaner data, or a stronger user experience.

The danger lies in over-engineering — chasing hype instead of impact — and spending valuable resources on ideas that sound exciting but don’t deliver business value.

Our Agent Evaluator helps us avoid that trap. It provides a structured, objective way to separate signal from noise — to focus on problems that truly benefit from agentic AI and skip the ones that don’t.

Inside the Diagnostic Framework

Aquant’s Agent Evaluator assesses each proposed use case across six key architectural dimensions to determine whether it qualifies as an agent — and what type of system design best fits.

Autonomy vs. Direction:

Should your system proactively take action or wait for explicit user commands?

Example: AI scheduling assistant vs. manual calendar interface.

Ambiguity vs. Specificity:

How well does your system need to handle unclear, natural-language inputs?

Example: Conversational search vs. structured filters.

Evolution vs. Stability:

Should your system learn and adapt over time, or maintain predictable, rule-based behavior?

Example: Self-improving recommendations vs. static workflows.

Personalization vs. Generalization:

Does your system need to adapt uniquely to each user or perform uniformly for everyone?

Example: Personalized AI assistant vs. standardized workflow tool.

Visual vs. Conversational:

How do users prefer to interact: through a visual interface or through natural-language conversation?

Example: Traditional GUI vs. chat-like interface.

Composable vs. Standalone:

Should your system integrate into existing tools or operate as a complete, independent product?

Example: API-first service vs. full platform.

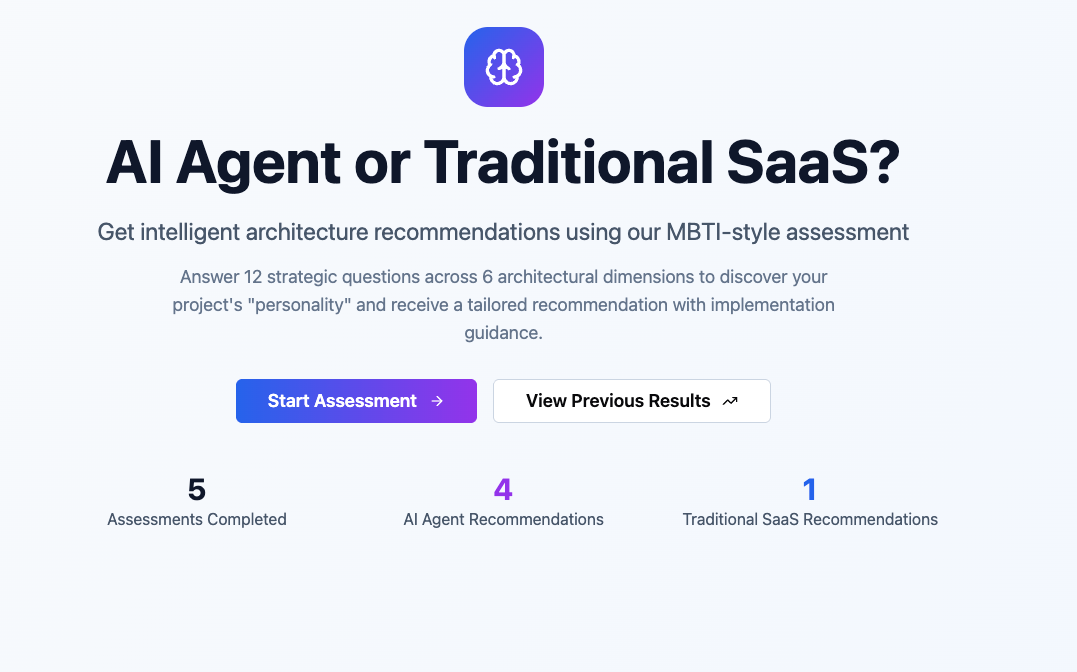

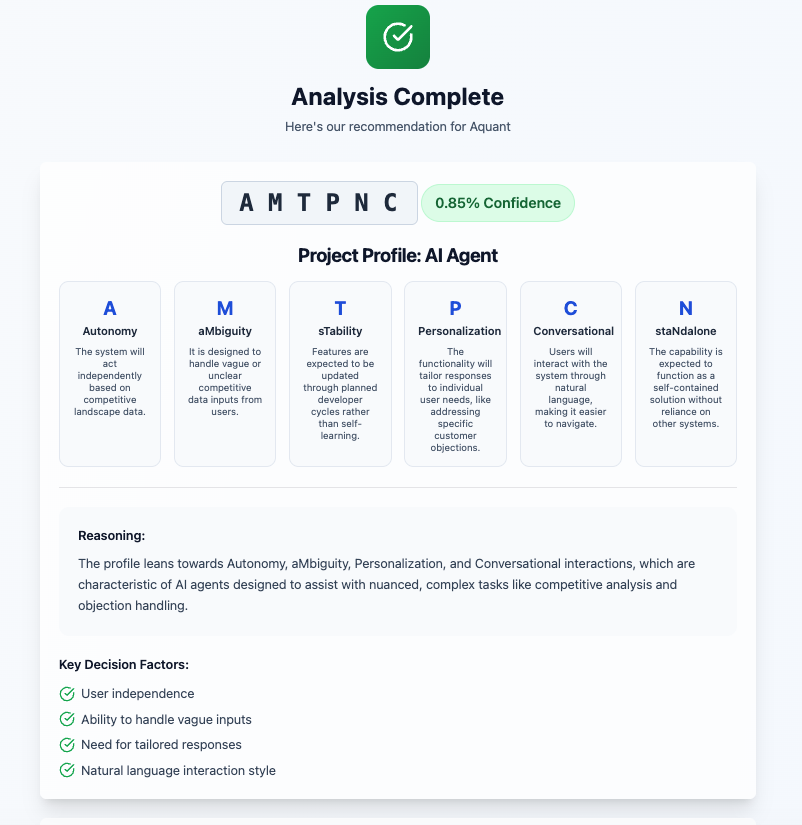

After a team answers 12 guided questions, Aquant’s AI generates a six-letter “project personality” profile, similar to a Myers-Briggs type, along with a recommendation that includes its reasoning, the key factors that influenced it, and implementation guidance.
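The scoring behind the evaluator isn’t described here, so the following is only a minimal sketch of how 12 answers could collapse into a six-letter profile. It assumes two questions per dimension, each answered on a 1 to 5 scale (1 leans fully toward the first pole named above, 5 toward the second), and uses hypothetical one-letter codes for each pole. The `recommend` rule is a toy placeholder, not the AI-generated recommendation the tool actually produces.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dimension:
    first: str         # pole named first in the framework, e.g. "Autonomy"
    first_code: str    # hypothetical one-letter code for that pole
    second: str        # pole named second, e.g. "Direction"
    second_code: str   # hypothetical one-letter code for that pole


# The six dimensions in the order listed above; the letter codes are assumptions.
DIMENSIONS = [
    Dimension("Autonomy", "A", "Direction", "D"),
    Dimension("Ambiguity", "M", "Specificity", "S"),
    Dimension("Evolution", "E", "Stability", "T"),
    Dimension("Personalization", "P", "Generalization", "G"),
    Dimension("Visual", "V", "Conversational", "C"),
    Dimension("Composable", "O", "Standalone", "L"),
]


def profile(answers: list[int]) -> str:
    """Map 12 answers (1 = strongly first pole, 5 = strongly second pole)
    to a six-letter profile, assuming two consecutive questions per dimension."""
    if len(answers) != 2 * len(DIMENSIONS):
        raise ValueError(f"expected {2 * len(DIMENSIONS)} answers")
    if any(not 1 <= a <= 5 for a in answers):
        raise ValueError("each answer must be between 1 and 5")
    letters = []
    for i, dim in enumerate(DIMENSIONS):
        mean = (answers[2 * i] + answers[2 * i + 1]) / 2
        # A neutral mean of 3 goes to the first pole; an arbitrary tie-break.
        letters.append(dim.first_code if mean <= 3 else dim.second_code)
    return "".join(letters)


def recommend(code: str) -> str:
    """Toy rule: treat the use case as an agent candidate only when it leans
    toward Autonomy, Ambiguity, and Evolution."""
    if code[:3] == "AME":
        return "agent candidate"
    return "traditional SaaS or automation"


if __name__ == "__main__":
    code = profile([5, 4, 5, 5, 4, 5, 3, 4, 2, 1, 2, 3])
    print(code, recommend(code))  # DSTGVO traditional SaaS or automation
```

Averaging a pair of questions per dimension keeps one stray answer from flipping a letter; a real evaluator would likely weight dimensions differently and explain its reasoning rather than apply a single hard-coded rule.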

This level of clarity helps our teams prioritize work, allocate resources effectively, and focus on building technology that drives measurable outcomes, not just “AI for AI’s sake.”

How It Has Changed Our Product Development

Example 1: When “Agentic” Wasn’t the Answer

One project began as an initiative to build an AI agent to manage service documentation — identifying the correct form for each case, filling in relevant details, and exporting a completed version back into the system. At first, it sounded like a perfect fit for an intelligent, context-aware agent that could automate repetitive steps.

But once we ran it through the Agent Evaluator, it became clear that the process didn’t require autonomy or reasoning — it followed a rigid, rule-based structure. The system simply needed to retrieve the correct form through an API, pre-fill it with data, and allow the user to review and submit it.
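To make the contrast concrete, here is a minimal sketch of what a rule-based version of that documentation workflow could look like. The form catalog, field names, and functions (`FORM_TEMPLATES`, `DraftForm`, `prefill`, `submit`) are hypothetical, and the dictionary lookup stands in for the API call that would retrieve the form. Every step is deterministic: a fixed mapping picks the form, fields are copied from the case record, and nothing is submitted without the user’s approval.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical form catalog keyed by case type. In the real workflow this lookup
# would be an API call to the service system; a dict stands in for it here.
FORM_TEMPLATES = {
    "warranty_repair": {"form_id": "WR-100", "fields": ["customer", "serial_number", "fault"]},
    "preventive_maintenance": {"form_id": "PM-200", "fields": ["customer", "serial_number", "visit_date"]},
}


@dataclass
class DraftForm:
    form_id: str
    values: dict[str, Optional[str]]
    missing: list[str] = field(default_factory=list)
    submitted: bool = False


def prefill(case: dict[str, str]) -> DraftForm:
    """Pick the form by a fixed rule and copy matching fields from the case record."""
    template = FORM_TEMPLATES[case["type"]]  # unknown case types raise KeyError
    values = {name: case.get(name) for name in template["fields"]}
    missing = [name for name, value in values.items() if value is None]
    return DraftForm(template["form_id"], values, missing)


def submit(draft: DraftForm, approved_by_user: bool) -> DraftForm:
    """Submit only after the user has reviewed the draft and every field is filled."""
    if not approved_by_user:
        raise PermissionError("the user must review the draft before it is submitted")
    if draft.missing:
        raise ValueError(f"missing fields: {draft.missing}")
    draft.submitted = True
    return draft
```

For example, `prefill({"type": "warranty_repair", "customer": "Acme", "serial_number": "SN-1"})` returns a `WR-100` draft whose `missing` list is `["fault"]`, so the user completes that field during review before calling `submit`.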

By reframing the project as a traditional SaaS workflow, we reduced complexity, improved maintainability, and achieved the same productivity gains — without over-engineering the solution. The lesson: not every “smart” problem needs an agent to solve it.

Example 2: When True Agentic AI Delivered Breakthrough Results

A strong example of a successful agentic use case emerged in our work on an AI-powered competitive analysis and objection-handling system for enterprise sales teams.

This agent autonomously gathers and interprets market intelligence from multiple sources, synthesizes insights about competitors, and dynamically generates tailored objection-handling strategies for account executives. Unlike static SaaS tools, it operates independently — interpreting vague or incomplete prompts, adapting to each situation, and maintaining conversational context.

Account executives can ask, “How should I respond if the customer mentions a lower-cost competitor?” and receive personalized, real-time guidance. Behind the scenes, multi-agent orchestration coordinates data retrieval, reasoning, and natural language response generation.
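The orchestration pattern can be sketched as a coordinator that passes each question through separate retrieval, reasoning, and response steps while keeping conversation state. This is a toy illustration, not Aquant’s implementation: the three agent functions and `MARKET_NOTES` are hypothetical stubs with canned data where a real system would call search tools and language models, and “context” here simply means reusing the previous turn’s facts when a follow-up question retrieves nothing new.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    facts: list[str] = field(default_factory=list)  # facts carried over from earlier turns
    turns: list[tuple[str, str]] = field(default_factory=list)  # (question, answer) history


# Hypothetical canned intelligence; a real retrieval agent would query live sources.
MARKET_NOTES = {
    "lower-cost": ["Competitor X advertises a lower license price but bills onboarding separately."],
}


def retrieval_agent(question: str) -> list[str]:
    """Return notes whose keyword appears in the question."""
    q = question.lower()
    return [note for key, notes in MARKET_NOTES.items() if key in q for note in notes]


def reasoning_agent(facts: list[str]) -> list[str]:
    """Turn retrieved facts into talking points, or ask for clarification."""
    if not facts:
        return ["Ask the customer which alternative they are comparing against."]
    return [f"Acknowledge the price concern, then reframe around total cost: {f}" for f in facts]


def response_agent(points: list[str]) -> str:
    """Stand-in for natural-language generation: join the talking points."""
    return " ".join(points)


def orchestrate(question: str, convo: Conversation) -> str:
    """Run retrieval, reasoning, and response in sequence, keeping context."""
    facts = retrieval_agent(question) or convo.facts  # follow-ups reuse earlier facts
    convo.facts = facts
    answer = response_agent(reasoning_agent(facts))
    convo.turns.append((question, answer))
    return answer
```

Keeping each step behind its own function boundary is what lets a stub be replaced by a real retrieval service or model call without changing the coordinator.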

Since deployment, this system has reduced sales preparation time and improved win rates, showing how autonomous, goal-driven AI can amplify human decision-making and deliver measurable business value.

What IT Leaders Can Take Away

For IT leaders, this approach offers a practical path to responsible AI adoption. Agentic AI introduces new challenges — from model drift and data dependencies to ethical considerations and unpredictable behavior. Knowing when and where to deploy it matters more than ever.

Choosing the “safe” option rarely drives transformation, but taking an uncalculated AI risk can erode credibility if it fails. Frameworks like the Agent Evaluator don’t restrict innovation — they make it sustainable, explainable, and safe.

By using a structured diagnostic process, IT leaders can:

- Focus resources on high-impact, low-risk opportunities

- Build alignment and trust across teams

- Avoid “AI theater,” where solutions sound advanced but deliver little value

- Accelerate time-to-value by selecting use cases that are truly AI-ready

The Future of Responsible AI Innovation

As agentic AI becomes more capable, discernment will define leadership. The next wave of innovation won’t come from those who build agents the fastest — but from those who know when not to.

At Aquant, our Agent Evaluator reflects that philosophy. It helps us reduce risk, stay focused, and ensure every AI initiative is grounded in business reality.

The future of AI won’t be defined only by what we can build, but also by the wisdom to know what we shouldn’t.