Why Conversational AI Is the Next Frontier for Field Service

Retrieval-Augmented Generation (RAG) became the first practical method for applying generative AI—powered by large language models (LLMs)—within enterprise workflows. It grounds every answer in a live document so hallucinations drop and auditors can click the source. But RAG is still one-shot intelligence: you ask, it answers, full stop.

Real-world service and support workflows seldom end in one volley. Diagnostics, troubleshooting and compliance reviews are inherently conversational:

“Which firmware are you on?”

“Did tightening the bolts clear the sensor fault?”

“Let’s confirm the client’s region before we quote that regulation.”

A system that cannot probe, remember and confirm leaves humans to fill the gaps. Aquant’s Retrieval-Augmented Conversation (RAC) design wraps the same retrieval engine inside a multi-turn, memory-aware loop that keeps asking, keeps learning and keeps refining until the job is done, or hands off to a human with full context.

The sections below explain RAG’s value, diagnose its limitations, introduce Aquant RAC, illustrate it in action, lay out the reference architecture Aquant deploys in production, walk through a staged rollout on the Aquant platform, and—new in this edition—show how RAC unlocks true multichannel AI across web, mobile, voice and collaboration tools.

1. Understanding RAG: How Retrieval-Augmented Generation Delivers Fast, Trustworthy Answers

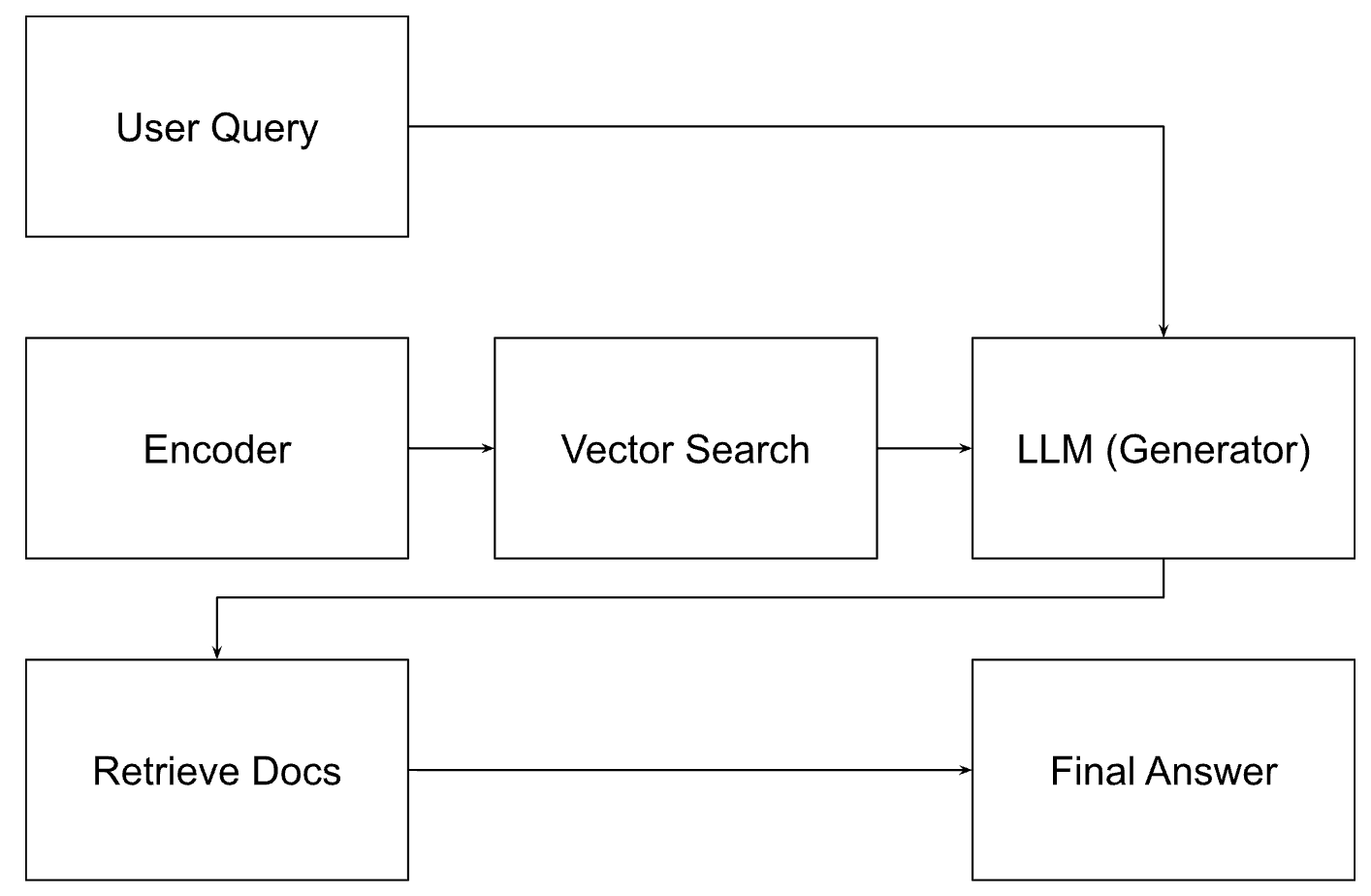

1.1 How Retrieval-Augmented Generation (RAG) Works—Explained Simply

Imagine walking into a factory’s expert tool room. A technician says:

“I’m about to service the hydraulic press—what torque spec do I need?”

The tool clerk:

- Heads straight to the drawer with specs for that family of machines

- Flips to the exact sheet for the technician’s configuration

- Reads off the torque requirement and jots it down

- Hands over both the note and the reference so the tech can double-check

That’s RAG—in human form. In software, it works like this:
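As a rough illustration of the tool-clerk steps (find the right drawer, pick the exact sheet, read off the answer, hand over the reference), here is a minimal Python sketch. It is not Aquant's implementation: the names `Document`, `tokenize`, `retrieve` and `answer`, the two-document corpus, and the torque value are all invented for this example. Simple word overlap stands in for a real vector search, and the LLM generation step is replaced by returning the retrieved passage verbatim.

```python
from dataclasses import dataclass


@dataclass
class Document:
    source: str  # hypothetical reference the user can check
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(text.lower().replace("?", " ").replace(".", " ").split())


def retrieve(query: str, corpus: list[Document]) -> Document:
    """Return the document that shares the most words with the query."""
    query_words = tokenize(query)
    return max(corpus, key=lambda doc: len(query_words & tokenize(doc.text)))


def answer(query: str, corpus: list[Document]) -> dict[str, str]:
    """Ground the reply in the retrieved passage and cite its source."""
    doc = retrieve(query, corpus)
    # A real system would pass doc.text to an LLM here; this sketch
    # returns the passage as-is so the example stays runnable.
    return {"answer": doc.text, "source": doc.source}


# Made-up corpus: the values are illustrative, not real specifications.
corpus = [
    Document("press-manual#torque", "Hydraulic press torque spec is 85 Nm for the head bolts."),
    Document("hvac-guide#e12", "HVAC error E12 means the condenser fan has stalled."),
]

result = answer("What torque spec do I need for the hydraulic press?", corpus)
print(result["source"])
```

The point of returning `source` alongside `answer` is the "hands over both the note and the reference" step: the user can always check where the answer came from.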

1.2 Four Proven Benefits of RAG That Win Over CIOs

1.3 Best-Fit Use Cases for RAG: When a Quick Answer Is All You Need

RAG is a perfect fit when users just want accurate information—fast. A few examples:

- Error code lookup: A field tech sees a common HVAC error code. RAG instantly explains what it means.

- Diagram retrieval: A telecom engineer asks for a router wiring diagram. RAG surfaces the exact configuration.

- Calibration help: A maintenance worker needs to verify steps to calibrate a pressure sensor. RAG delivers the procedure.

In each case, the goal is the same: “Just tell me what I need to know.” Once the answer arrives, the job is done.

2. The Limits of One-Shot AI: Why RAG Falls Short in Complex, Outcome-Driven Workflows

Retrieval-Augmented Generation (RAG) delivers fast, grounded answers. But in real-world service and support environments, success isn’t measured by how good the answer looks—it’s measured by whether the problem gets solved.

Here’s where RAG falls short when outcomes matter most.

2.1 Ambiguity: When RAG Doesn’t Know What It Doesn’t Know

A field technician types:

“Pump 12 overheating—advice?”

But critical context is missing:

- What’s the fluid type?

- What’s the inlet temperature?

- What’s the pump’s maintenance history?

RAG doesn’t ask. It just retrieves and responds. The tech may get an answer—one that sounds confident—but it’s likely incomplete or incorrect.

And there’s no chance for the system to clarify:

“What’s the current coolant pressure?”

Without that follow-up, RAG can’t bridge the gap between symptom and solution.

2.2 RAG’s Conversation Blind Spot: No Follow-Ups, No Fixes

Most troubleshooting is inherently interactive. Humans solve problems by probing:

“Do you hear a humming noise?”

“Which firmware version are you running?”

“Have you tried a manual reset?”

But RAG doesn’t ask questions—it just answers once and stops. If that answer doesn’t resolve the issue, users are left to rephrase and try again.

The result? Friction. And frustrated teams who quickly lose trust in the tool.

2.3 RAG Isn’t Outcome-Aware: It Doesn’t Know If the Problem Is Solved

RAG’s success metric is simple: return a well-written answer.

But businesses care about:

- MTTR – Mean Time to Repair

- FTF – First-Time Fix Rate

- CSAT – Customer Satisfaction

A great-sounding paragraph that fails to resolve the issue? It doesn’t move any of those needles.

RAG doesn’t check if the outcome was achieved—because it wasn’t designed to.

2.4 RAG Can’t Access the Real Answers—Because They’re Not in the Docs

Many mission-critical answers don’t live in PDFs. They live in:

- ERP systems

- Job history records

- Sensor feeds

- Asset maintenance logs

A technician might ask:

“Can I get this part now?”

“Was this issue fixed last time?”

RAG, restricted to static documents, can only guess. Answers may sound convincing but lack operational value—because they miss the real data that drives the right decision.

2.5 Real-World Risks of RAG-Only Workflows

When RAG operates without follow-ups or access to real-time context, the downstream consequences add up fast.

Bottom Line: RAG delivers static answers. But support, diagnostics, and compliance are dynamic. Without the ability to ask, clarify, and confirm—RAG falls short.

3. Introducing RAC: How Retrieval-Augmented Conversation Drives Real Outcomes

RAG gives you a one-shot answer. But real-world service and support problems are messy. They require back-and-forth, context, and clarification.

That’s where Retrieval-Augmented Conversation (RAC) comes in. It combines the grounding power of RAG with multi-turn dialogue and persistent memory—closing the gap between question and resolution.

3.1 From Answer to Action: Upgrading the Tool Room Clerk with RAC

Let’s return to our tool-room analogy.

Now imagine a conversation coach stands beside the clerk.

When the technician’s request is vague, the coach nudges:

“Which press model are you working on? What symptoms are you seeing?”

If the first reference doesn’t solve the issue, the coach digs deeper:

“Did you already bleed the hydraulic line? Let’s check the troubleshooting bulletin.”

And when the tech says, “Still jammed,” the coach keeps going:

“Try this. Let’s rule out that sensor next.”

This loop—clarify → retrieve → guide—continues until:

- The issue is resolved, or

- A senior engineer takes over, fully briefed

That’s what RAC does: wraps each retrieval in a smart, memory-aware conversation that actually drives outcomes.
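The clarify → retrieve → guide loop can be sketched in a few lines of Python. This is an assumption-laden illustration, not Aquant's code: `Conversation`, `rac_loop`, the `ask` callback and the `max_steps` limit are hypothetical names, retrieval is stubbed out as an already-ordered list of candidate steps, and the model name in the usage example is invented.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Conversation:
    memory: dict[str, str] = field(default_factory=dict)  # facts confirmed so far
    transcript: list[str] = field(default_factory=list)   # full context for a hand-off


def rac_loop(
    required: dict[str, str],
    steps: list[str],
    ask: Callable[[str], str],
    max_steps: int = 3,
) -> tuple[str, Conversation]:
    """Clarify missing facts, then guide step by step until resolved or escalated."""
    convo = Conversation()
    # Clarify: ask each required question and remember the reply.
    for key, question in required.items():
        convo.memory[key] = ask(question)
        convo.transcript.append(f"{question} -> {convo.memory[key]}")
    # Retrieve and guide: `steps` stands in for retrieved procedures.
    for step in steps[:max_steps]:
        reply = ask(f"{step} Did that fix the issue?")
        convo.transcript.append(f"{step} -> {reply}")
        if reply.strip().lower() == "yes":
            return "resolved", convo
    # Nothing worked: hand off with the whole transcript attached.
    return "escalated", convo


# Scripted replies stand in for a live technician.
answers = iter(["Model P-200", "no", "yes"])
status, convo = rac_loop(
    required={"model": "Which press model are you working on?"},
    steps=["Bleed the hydraulic line.", "Check the position sensor."],
    ask=lambda prompt: next(answers),
)
print(status, len(convo.transcript))
```

Either exit path returns the `Conversation`, so a senior engineer who takes over receives the same memory and transcript the loop built up.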

3.2 Inside the RAC Loop: How Aquant’s AI Reaches Resolution Step-by-Step

RAC replaces static Q&A with an intelligent, iterative loop—blending retrieval and reasoning across every turn.

Each turn builds context. Each answer gets smarter. And the user moves closer to resolution.

3.3 Why Dialogue Is Essential to Solving Complex Problems

Complex service issues rarely resolve in a single step.

They require a process of:

- Asking clarifying questions

- Verifying next steps

- Confirming outcomes

RAC does this naturally. It asks things like:

“Did that fix the issue?”

“What’s the part number on the unit?”

“Are you hearing a click or a hum?”

Each question narrows the possible cause. In equipment diagnostics, this iterative narrowing—from vague symptom to precise fix—is critical.

Expecting a single-shot answer to handle this complexity is not only unrealistic—it’s a recipe for escalation and delay.
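To make the idea of iterative narrowing concrete, here is a small Python sketch in which each answer eliminates the causes that do not match it. The cause table, the observation names and the `narrow` function are all hypothetical and exist only for illustration; a production system would draw its candidates from retrieved service data.

```python
# Hypothetical mapping from candidate cause to the observations it predicts.
CANDIDATE_CAUSES = {
    "loose relay": {"sound": "click", "reset_helped": "no"},
    "failing fan motor": {"sound": "hum", "reset_helped": "no"},
    "firmware glitch": {"sound": "none", "reset_helped": "yes"},
}


def narrow(
    candidates: dict[str, dict[str, str]], observation: str, value: str
) -> dict[str, dict[str, str]]:
    """Keep only the causes consistent with the latest answer."""
    return {
        cause: signs
        for cause, signs in candidates.items()
        if signs.get(observation) == value
    }


# "Have you tried a manual reset?" -> it did not help.
remaining = narrow(CANDIDATE_CAUSES, "reset_helped", "no")
# "Are you hearing a click or a hum?" -> a hum.
remaining = narrow(remaining, "sound", "hum")
print(list(remaining))
```

Two questions reduce three candidates to one, which is the kind of progress a single-shot answer cannot make.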

RAC Adapts to Your Team

The RAC loop also adjusts based on the persona interacting with it:

- A junior tech might get more step-by-step guidance.

- A field veteran sees streamlined suggestions.

- A call center agent receives co-piloted probing and next-best actions.

RAC learns how much help each user needs—and adapts in real time.

RAC as a Coach, Not Just a Bot

With RAG, users get an answer. If it works, great. If not—they’re stuck.

But RAC acts like a diagnostic coach.

It teaches users:

- What questions to ask

- How to think through a problem

- Why a solution works

The result?

- Faster onboarding

- Smarter technicians

- Fewer avoidable escalations

3.4 RAG vs. RAC: A Side-by-Side Comparison for Enterprise Service Teams

3.5 Feeding RAC Better Data = Better Decisions

Most RAG systems retrieve from static documents only. But RAC can handle far more—if it’s fed the right data.

Say a tech asks: “Which part should I swap?”

RAC can weigh:

- Price differences

- Parts availability

- Remote repair options

- Common fix success rates

By augmenting the retriever with parts catalogs, cost/time data, fix history, and field insights, RAC becomes decision-aware, not just document-aware.

This unlocks the most efficient, cost-effective, and field-ready solution—not just the first plausible one.
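One way to picture "decision-aware" ranking is a score that combines the factors listed above. The Python sketch below is only an assumption about how such a score could look: the `PartOption` fields, the sample parts and prices, and the "price divided by fix success rate" rule are all invented here, not taken from the Aquant platform.

```python
from dataclasses import dataclass


@dataclass
class PartOption:
    name: str
    price: float             # cost of the part
    in_stock: bool           # parts availability
    fix_success_rate: float  # share of past jobs this swap resolved (0 to 1)


def rank_options(options: list[PartOption]) -> list[PartOption]:
    """Drop unavailable parts, then sort by expected cost per successful fix."""
    available = [o for o in options if o.in_stock and o.fix_success_rate > 0]
    return sorted(available, key=lambda o: o.price / o.fix_success_rate)


# Illustrative data only.
options = [
    PartOption("control board", price=400.0, in_stock=True, fix_success_rate=0.9),
    PartOption("fan relay", price=40.0, in_stock=True, fix_success_rate=0.6),
    PartOption("compressor", price=1200.0, in_stock=False, fix_success_rate=0.95),
]

ranked = rank_options(options)
print([o.name for o in ranked])
```

Here the cheaper relay ranks first even though its success rate is lower, and the out-of-stock compressor is excluded, which a document-only retriever would have no way to know.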

4. Real-World RAC Scenarios: From Field Techs to Customer Support

In complex, outcome-driven environments, a single-shot answer is rarely enough. RAC shines in these scenarios—driving resolution by guiding users through every step of the process.

Below are real examples that show how RAC delivers results across field service, telecom, healthcare, legal, and IT support.

4.1 Field Service Example: Diagnosing a Turbine Alarm

Outcome: Problem resolved with a first-time fix. RAC guided the process step-by-step, captured the resolution, and fed insights into analytics—no escalation needed.

4.2 Telecom Example: Resolving Wi-Fi Dropouts

Outcome: No agent needed. RAC pinpointed the issue, initiated a fix, verified resolution, and completed the loop—all through conversation.

4.3 Additional RAC Use Cases Across Industries

Healthcare Triage

- RAC-bot asks for symptom duration, severity, and current medications

- Retrieves evidence-based triage protocols tailored to patient profile

Legal Drafting

- RAC-bot confirms jurisdiction (“Is this for the US or EU?”)

- Surfaces region-specific statutes and prevents cross-border citation errors

Internal IT Help Desk

- RAC collects OS version and error code

- Retrieves patch instructions, verifies reboot success

- Average handling time cut in half

Outcome: RAC adapts to any industry where resolution depends on clarifying details and guiding users to the right next step.

Whether it’s diagnosing turbine alarms, patching routers, triaging health symptoms, or fixing laptops—RAC turns multi-step problems into resolved tickets.

5. Inside the Aquant Platform: RAC Reference Architecture Explained

RAC isn’t just a concept—it’s live and running across some of the world’s most complex service organizations. And it doesn’t require stitching together multiple tools or vendor ecosystems.

Aquant’s full RAC loop is built into its platform—allowing fast, scalable deployment with no outside orchestration.

Here’s how it works under the hood.

RAC Reference Architecture

Fully Integrated. No Vendor Sprawl.

Unlike most enterprise AI deployments, RAC doesn’t depend on external orchestration platforms or custom integration layers.

Everything runs on the Aquant platform.

No additional vendors. No fragile pipelines. No separate tools to train, monitor, or govern.

From retrieval and generation to memory and escalation, Aquant delivers the full RAC stack—ready for production.

6. How to Deploy RAC: A Step-by-Step Playbook for Success

RAC is designed for fast rollout and measurable results. Aquant customers move from prototype to production using a clear, structured playbook—without needing extensive model training or orchestration layers.

Here’s how to deploy RAC on the Aquant platform for immediate business value.

Stage 0 – Choose the Right Use Case

Start with one outcome-heavy workflow.

- Example: Compressor over-temperature alarms

- Identify high-impact, high-friction areas where resolution speed matters most

- Baseline key KPIs:

  - MTTR (Mean Time to Repair)

  - FTF (First-Time Fix Rate)

  - CSAT (Customer Satisfaction)

Why it matters: This gives you a measurable before-and-after snapshot to prove RAC’s value.
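A baseline can be as simple as three averages over historical tickets. The sketch below assumes a hypothetical `Ticket` record with repair time, visit count and a survey score; the field names, the sample data, and the choice to report CSAT as a mean score are assumptions, since organizations define these KPIs in slightly different ways.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    repair_hours: float  # time from report to fix
    visits: int          # technician visits needed to resolve
    csat: int            # post-job survey score


def baseline(tickets: list[Ticket]) -> dict[str, float]:
    """Compute MTTR, first-time fix rate and mean CSAT for a set of tickets."""
    n = len(tickets)
    return {
        "mttr_hours": sum(t.repair_hours for t in tickets) / n,
        "ftf_rate": sum(1 for t in tickets if t.visits == 1) / n,
        "csat": sum(t.csat for t in tickets) / n,
    }


# Illustrative history for one workflow.
history = [
    Ticket(repair_hours=4.0, visits=1, csat=5),
    Ticket(repair_hours=6.0, visits=2, csat=3),
    Ticket(repair_hours=2.0, visits=1, csat=4),
]

kpis = baseline(history)
print(kpis)
```

Running the same function on tickets handled after rollout gives the "after" half of the comparison.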

Stage 1 – Clean and Curate Your Knowledge

Ensure your knowledge base is ready for retrieval.

- Ingest structured and unstructured data via Aquant’s pipeline:

  - PDFs

  - HTML

  - CRM and service history data

- Deduplicate content and tag with relevant metadata

- Automatically flag obsolete SOPs for archiving

Why it matters: Cleaner data = sharper answers = faster resolutions.
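Deduplication is the easiest of these steps to picture in code. The following Python sketch removes exact duplicates after light normalization and attaches a content hash as metadata. It is a generic illustration, not Aquant's ingestion pipeline: `normalize`, `deduplicate` and the record layout are hypothetical, and near-duplicate detection would need a fuzzier technique than hashing.

```python
import hashlib


def normalize(text: str) -> str:
    """Collapse whitespace and case so trivial variants hash the same."""
    return " ".join(text.lower().split())


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first copy of each distinct text and tag it with its hash."""
    seen: set[str] = set()
    unique = []
    for record in records:
        digest = hashlib.sha256(normalize(record["text"]).encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # same content already ingested
        seen.add(digest)
        unique.append({**record, "content_hash": digest})
    return unique


# Illustrative records: the first two differ only in spacing and case.
records = [
    {"source": "sop-17.pdf", "text": "Bleed the hydraulic line before service."},
    {"source": "sop-17-copy.pdf", "text": "Bleed  the hydraulic line before SERVICE."},
    {"source": "faq.html", "text": "Reset the controller after a power loss."},
]

clean = deduplicate(records)
print([r["source"] for r in clean])
```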

Stage 2 – Prototype Your First RAC Skill

Test the RAC loop in a controlled sandbox.

- Use the Aquant Console to:

  - Create a RAC Skill

  - Fine-tune prompts based on your business language and KPIs

  - Run real queries with synthetic or test users

Why it matters: A working prototype builds internal alignment and ensures accuracy before going live.

Stage 3 – Controlled Launch with Agent Assist

Deploy RAC with human-in-the-loop guardrails.

- Enable the Agent-Assist Overlay:

  - RAC makes the suggestion

  - Human agents review/edit before responding

- Monitor:

  - Containment rate

  - MTTR improvement

  - Agent satisfaction

Why it matters: This step builds trust—agents learn RAC’s value while still owning the customer experience.

Stage 4 – Enable Autonomous Mode

Let RAC auto-resolve issues that meet performance thresholds.

- Activate Auto-Resolve for Tier-1 categories where:

  - Containment ≥ 80%

  - CSAT ≥ 4.5

- Human escalation path remains in place for exceptions

Why it matters: This is where the real ROI kicks in—cut resolution times, reduce escalations, and boost CSAT.
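The gating rule for this stage reduces to a threshold check per category. The sketch below uses the two thresholds stated above; everything else (the function name, the stats layout and the sample categories) is hypothetical and only illustrates the check.

```python
CONTAINMENT_THRESHOLD = 0.80  # Containment >= 80%
CSAT_THRESHOLD = 4.5          # CSAT >= 4.5


def auto_resolve_categories(stats: dict[str, dict[str, float]]) -> list[str]:
    """Return the categories that meet both thresholds, in alphabetical order."""
    return sorted(
        category
        for category, s in stats.items()
        if s["containment"] >= CONTAINMENT_THRESHOLD and s["csat"] >= CSAT_THRESHOLD
    )


# Illustrative per-category results from the agent-assist stage.
stats = {
    "password reset": {"containment": 0.91, "csat": 4.7},
    "filter replacement": {"containment": 0.84, "csat": 4.2},
    "sensor recalibration": {"containment": 0.62, "csat": 4.8},
}

eligible = auto_resolve_categories(stats)
print(eligible)
```

Categories that miss either threshold stay in agent-assist mode, which keeps the human escalation path as the default for anything unproven.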

Stage 5 – Drive Continuous Improvement

Use real interactions to train and optimize RAC.

- Surface low-confidence responses via Aquant Insight Dashboards

- Retrain embeddings or tweak prompts with one-click retraining

- Run prompt A/B tests directly in the console

- Control costs with built-in model caching and batching

Why it matters: Every conversation becomes a feedback loop. RAC gets smarter over time through refreshed embeddings and better prompts, without retraining the underlying language model.

Built-In Governance and Compliance

Security is embedded, not bolted on.

- Role-based access controls (RBAC)

- PII redaction

- GDPR-compliant data retention

- On-demand audit exports via Aquant Console

Why it matters: You can deploy RAC confidently in regulated or sensitive environments.
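As a small illustration of what PII redaction involves, the Python sketch below masks email addresses and phone-like numbers before a transcript is stored. The patterns are deliberately simple, the `redact` function and placeholder labels are hypothetical, and a production control would need broader coverage (names, addresses, account numbers) than two regular expressions.

```python
import re

# Simplified patterns for illustration; they will miss many real-world formats.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_PATTERN = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    return PHONE_PATTERN.sub("[PHONE]", text)


message = "Contact jane.doe@example.com or +1 (555) 010-2030 about pump 12"
print(redact(message))
```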

From day one to self-sufficiency, RAC follows a proven, repeatable path to value.

Whether you’re solving service tickets, triaging support issues, or enabling field techs, this playbook scales with you.

7. Unlocking Multichannel AI with RAC: One Engine, Every Channel

RAC is designed to meet users where they are—whether that’s in a headset on the factory floor, inside a web app, or in a WhatsApp chat at 2 a.m.

Because RAC is inherently conversation-native, it maintains full context across platforms, learns from every interaction, and drives toward resolution—no matter the interface.

7.1 Conversation Everywhere: How RAC Works Across All Interfaces

RAC supports seamless communication across every modern support channel—ensuring consistent guidance, persistent memory, and instant access.

Key Takeaway: RAC delivers consistent, contextual guidance—regardless of how or where the conversation starts.

7.2 Why Multichannel Matters: Business Impact Beyond Convenience

True multichannel support isn’t just about flexibility—it’s about enabling smarter, faster service across every touchpoint. RAC delivers real business value in every scenario.

7.3 How Aquant Makes It Work

Aquant’s multichannel architecture is built for speed, scale, and enterprise-grade governance—out of the box.

- Unified Conversation Gateway: One WebSocket and one telephony adapter feed all channels into the Dialogue Manager

- Channel-Aware Prompts: RAC auto-formats output for each medium (Markdown, plain text, or SSML for voice)

- Secure Hand-Offs: Channel tokens map to enterprise identities, maintaining role-based permissions across platforms

- Analytics Convergence: Every message, audio exchange, and escalation flows into a single transcript lake, allowing you to analyze performance across every surface
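Channel-aware formatting can be pictured as one set of steps rendered three ways. The sketch below is an assumption about how that might look, not Aquant's implementation: `format_for_channel` and the channel names are hypothetical, while `<speak>` and `<break>` are standard SSML elements.

```python
from html import escape


def format_for_channel(steps: list[str], channel: str) -> str:
    """Render the same guidance as Markdown, SSML or plain text."""
    if channel == "web":
        # Markdown numbered list for rich web and chat clients.
        return "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    if channel == "voice":
        # SSML with a short pause between steps; escape XML special characters.
        body = '<break time="500ms"/>'.join(escape(step) for step in steps)
        return f"<speak>{body}</speak>"
    # Plain text fallback for SMS-style channels.
    return " ".join(f"({i}) {step}" for i, step in enumerate(steps, start=1))


steps = ["Power off the unit.", "Reseat the cable."]
print(format_for_channel(steps, "web"))
print(format_for_channel(steps, "voice"))
print(format_for_channel(steps, "sms"))
```

Keeping the reasoning output as a plain list of steps and formatting only at the edge is what lets one engine serve every channel.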

Bottom Line: RAC offers one reasoning engine, one memory layer, and one feedback loop—deployed across every interface your team or customers already use.

8. How RAC Adds Value at Every Stage of the Service Lifecycle

RAG helps users get an answer. RAC helps teams get results.

That core difference unlocks measurable value across every phase of the service experience—from self-service to workforce development.

Here’s how RAC transforms each stage:

Side-by-Side Comparison: RAG vs. RAC Across the Service Lifecycle

Why It Matters: RAC doesn’t just power faster fixes—it powers smarter operations.

Every micro-interaction becomes a data point that sharpens your service, improves technician performance, and boosts long-term ROI.

Closing Thoughts: Turning Answers Into Outcomes

RAG was step one. It made enterprise knowledge searchable and answers more reliable. But answers alone don’t drive outcomes—action does.

RAC is step two. It replicates the probing, clarifying, and iterative reasoning of your most experienced technicians—at scale, across channels, and 24/7.

If you take just three steps after reading this, make them these:

1. Audit an outcome-heavy workflow

Pick a real use case where success is measured by resolution, not just information.

How many back-and-forths does it typically take a human to solve it?

2. Prototype RAC with real data

Use a narrow but meaningful knowledge set.

Run baseline metrics—MTTR, FTF, CSAT—before you touch a line of code.

3. Prove success, then scale wisely

RAC isn’t a chatbot. It’s a discipline: retrieval, reasoning, questioning, confirmation.

Start in one domain. Then copy-paste the loop across your service organization.

Welcome to the conversational era of enterprise AI.

Aquant’s RAC blueprint is already in production. The next move is yours.

About the Author

Indresh Satyanarayana, VP of Product Technology & Labs at Aquant

Indresh Satyanarayana is a B2B SaaS veteran with over 20 years of experience, including 15 years in field service management. He has held technology leadership roles at SAP and ServiceMax, where he was Chief Architect. Now at Aquant, he leads the Innovation Labs team, bridging market trends, emerging tech, and customer needs.
